AMBF held a half-day workshop in the US on 2023-04-19. The simulation part can be run on Colab, but it covers image segmentation, not CRTK automation.

On Ubuntu 20.04, install ROS Noetic first. If you have your own GPU, then in order: install ambf, download the data they provide, and run the image segmentation.

First, (A) install ambf (really the same as before)

# Install ambf dependencies

sudo apt-get install libasound2-dev libgl1-mesa-dev xorg-dev

sudo apt-get install ros-noetic-cv-bridge ros-noetic-image-transport

source /opt/ros/noetic/setup.bash #source ros libraries

git clone https://github.com/WPI-AIM/ambf

cd ambf

mkdir build

cd build

cmake ..

make -j7

Next, edit the .bashrc file

gedit ~/.bashrc #open bashrc

and insert

activate_ros_env(){

source /opt/ros/noetic/setup.bash #ROS

export PATH=$PATH:~/ambf/bin/lin-x86_64 #AMBF

source ~/ambf/build/devel/setup.bash #AMBF

}

alias ros="activate_ros_env"

Remember to source it once

. ~/.bashrc

Then open 2 terminals. In one, run

ros && roscore

and in the other, run

ros && ambf_simulator
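If either terminal reports "command not found", the alias probably did not load or the build did not finish. Here is a minimal sketch for checking that, assuming a hypothetical helper named check_cmds (my own name, not part of ambf or ROS). It relies only on the standard shell builtin `command -v`:

```shell
# Hypothetical helper (not from ambf or ROS): report which of the
# given commands can be resolved on the current PATH.
check_cmds() {
    _missing=0
    for _cmd in "$@"; do
        if command -v "$_cmd" >/dev/null 2>&1; then
            echo "found: $_cmd"
        else
            echo "missing: $_cmd"
            _missing=1
        fi
    done
    # Exit status 0 only when every command was found
    return "$_missing"
}

# Usage, after running `ros` in the terminal:
# check_cmds roscore ambf_simulator
```

A "missing" line for ambf_simulator would suggest that the PATH entry for ~/ambf/bin/lin-x86_64 was not picked up.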

Next, (B) download the data they provide

ros ## Assuming you set up the alias in the previous step

cd ~

git clone https://github.com/jabarragann/surgical_robotics_challenge.git

cd surgical_robotics_challenge/scripts

pip install -e .

python -c "import surgical_robotics_challenge; print(surgical_robotics_challenge.__file__)"

cd ~/surgical_robotics_challenge #cd <path-surgical-robotics-challenge>

./run_environment_3d_med.sh

Finally, (C) run the image segmentation

cd ~

git clone https://github.com/Accelnet-project-repositories/dVRK-segmentation-models.git

cd dVRK-segmentation-models

pip install -e . -r requirements_basic.txt --user

echo 'export PATH=$PATH:$HOME/.local/bin' >> ~/.bashrc # Add your local bin to path

Remember to source it again

. ~/.bashrc
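One side note on the `export PATH=$PATH:...` lines used in this post: every time .bashrc is sourced, they append the same directory again, so PATH slowly grows. It is harmless, but a guarded append avoids it. A minimal sketch, assuming a hypothetical helper named path_append (my own name, not something provided by these repositories):

```shell
# Hypothetical helper: append a directory to PATH only if it is
# not already present, so re-sourcing .bashrc does not duplicate it.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;              # already on PATH, do nothing
        *) PATH="$PATH:$1" ;;     # not there yet, append it
    esac
    export PATH
}

path_append "$HOME/.local/bin"
```

Wrapping PATH in colons before matching means only a whole entry counts as a match, not a substring of a longer directory name.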

surg_seg_ros_video_record --help

git clone https://github.com/dayon95/AMBFSegmentation.git

Then run the program

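However, this last item, the Colab Python Jupyter notebook, apparently can be run directly on Colab with no need for Ubuntu 20.04 + ROS Noetic at all, so steps (A), (B), and (C) above all seem to have been unnecessary.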

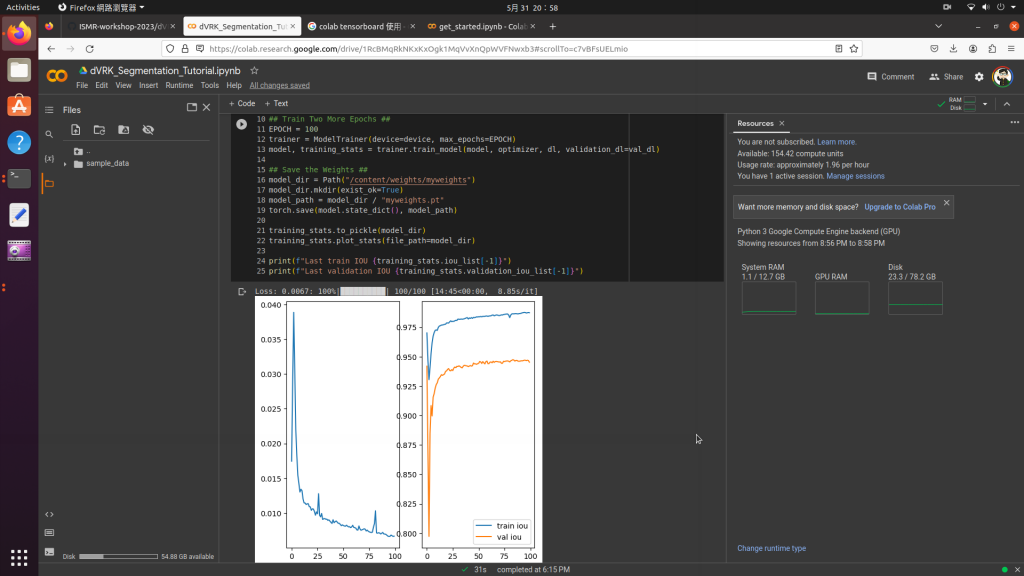

The original program trains for 40 epochs and then 2 more times. I raised it to 100 and observed that it converges after roughly 50 epochs.